If you’re working with compressed indexes, SQL Server provides a system stored procedure to help test the space savings of implementing data compression: sp_estimate_data_compression_savings. Starting in SQL Server 2019, it can even be used to estimate savings with columnstore.

I really don’t like sp_estimate_data_compression_savings. In fact, I kind of hate it. It’s not always very accurate–and even when it is accurate, the results can be misleading. Before I get ranty about why I don’t like it, let’s look at it in action.

First, some data to play with

We’ll create a fresh new database, with a fresh table, and pump it full of data:

USE master

-- Create a DB to play in

DROP DATABASE IF EXISTS CompressionTest;

GO

CREATE DATABASE CompressionTest;

ALTER DATABASE CompressionTest

SET RECOVERY SIMPLE;

GO

USE CompressionTest

GO

CREATE TABLE dbo.ReallyBigTable (

ReallyBigTableID bigint identity(1,1),

StoreID tinyint,

CustomerID int,

ProductID int,

TransactionTypeID int,

CONSTRAINT PK_ReallyBigTable PRIMARY KEY CLUSTERED (ReallyBigTableID)

);

GO

INSERT INTO dbo.ReallyBigTable (StoreID, CustomerID, ProductID, TransactionTypeID)

SELECT StoreID = c1.column_id,

CustomerID = c1.object_id,

ProductID = c2.object_id%10000,

TransactionTypeID = c2.system_type_id

FROM sys.columns AS c1

CROSS JOIN sys.columns AS c2;

GO 100

Estimate the space savings now

First, let’s take a look at the size of the table:

EXEC sp_spaceused ReallyBigTable;

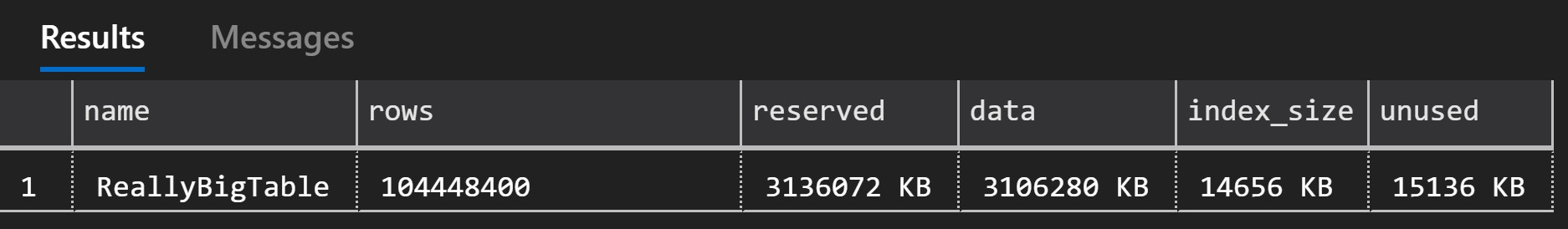

The table is 104 million rows, and 2.99 GB.

Now, let’s take a look at what sp_estimate_data_compression_savings estimates for space savings with different levels of data compression:

EXEC sp_estimate_data_compression_savings 'dbo','ReallyBigTable',NULL,NULL,'NONE'; EXEC sp_estimate_data_compression_savings 'dbo','ReallyBigTable',NULL,NULL,'ROW'; EXEC sp_estimate_data_compression_savings 'dbo','ReallyBigTable',NULL,NULL,'PAGE';

A selection from the results estimate the size as thus:

| Compression Level | Size with current compression (KB) |

Estimated size with new compression (KB) |

Estimated savings |

|---|---|---|---|

| NONE | 3120936 | 3107200 | 0.44% |

| ROW | 3120936 | 3120936 | 0.00% |

| PAGE | 3120936 | 1364376 | 56.28% |

I’m not going to dispute any of those numbers….at least not right now. This table is a tidy little thing that was freshly built with very full pages… What happens if we play around with the data some?

SET NOCOUNT ON;

SELECT 1;

WHILE @@ROWCOUNT > 0

BEGIN

DELETE TOP (1000000) rbt

FROM dbo.ReallyBigTable AS rbt

WHERE ReallyBigTableID%2 = 0;

END;

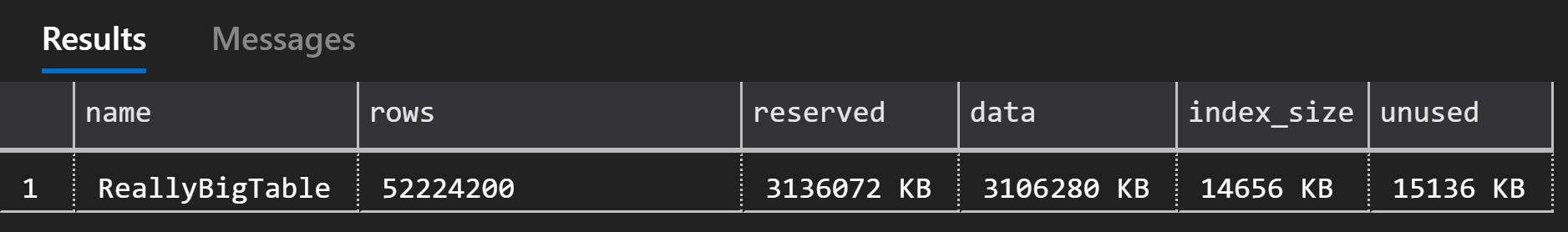

I’ve essentially just deleted half the rows by deleting everything with an even ID number. This is a good way to simulate low page density. (In real life, you’d get here through “normal” means of page splits and whatnot.) If we look at the size of the table now:

EXEC sp_spaceused ReallyBigTable;

We can see that the number of rows is half what it was at the start (as intended), but the size hasn’t changed any. Fewer rows in the same amount of space indicates we’ve got low fill on these pages. We can confirm that by looking at the index stats. This query confirms we’re at 49.8% page fullness:

SELECT avg_page_space_used_in_percent

FROM sys.dm_db_index_physical_stats

(DB_ID(), OBJECT_ID(N'dbo.ReallyBigTable'), NULL, NULL , 'DETAILED')

WHERE index_level = 0;

OK, now that I’ve messed with the page fullness, let’s look at the index compression estimates again. This gives a better “real life” scenario of running this procedure on Prod, where your indexes are likely not all tidy, freshly rebuilt and perfect:

EXEC sp_estimate_data_compression_savings 'dbo','ReallyBigTable',NULL,NULL,'NONE'; EXEC sp_estimate_data_compression_savings 'dbo','ReallyBigTable',NULL,NULL,'ROW'; EXEC sp_estimate_data_compression_savings 'dbo','ReallyBigTable',NULL,NULL,'PAGE';

| Compression Level | Size with current compression (KB) |

Estimated size with new compression (KB) |

Estimated savings |

|---|---|---|---|

| NONE | 3120936 | 1547360 | 50.42% |

| ROW | 3120936 | 1711280 | 45.17% |

| PAGE | 3120936 | 1452264 | 53.47% |

Now these numbers look pretty odd. There are a few odd things here:

- It looks like all three compression levels will save about the same amount of space.

- With half as many rows, page compression estimates are larger than the prior estimate for the full sized table.

- The table currently has no compression, but estimating for NONE shows significant space savings

I don’t trust those estimates

Just like I don’t fully trust estimated execution plans, and I don’t trust estimated compression savings. I want to see the actual results. Let’s rebuild my table with each compression level, and see what the size is after each rebuild:

ALTER INDEX PK_ReallyBigTable ON dbo.ReallyBigTable REBUILD WITH (DATA_COMPRESSION=NONE); EXEC sp_spaceused ReallyBigTable; ALTER INDEX PK_ReallyBigTable ON dbo.ReallyBigTable REBUILD WITH (DATA_COMPRESSION=ROW); EXEC sp_spaceused ReallyBigTable; ALTER INDEX PK_ReallyBigTable ON dbo.ReallyBigTable REBUILD WITH (DATA_COMPRESSION=PAGE); EXEC sp_spaceused ReallyBigTable;

| Compression Level | Actual size (KB) | Previous Estimate (KB) | Variance from Estimate |

|---|---|---|---|

| NONE | 1556496 | 1547360 | 0.6% |

| ROW | 850192 | 1711280 | 101.3%% |

| PAGE | 639624 | 1452264 | 127.0% |

Well, I was right–those were some terrible estimates. How can they be off by more than double?

Why are the estimates so bad?

Estimated compression savings are estimates–they aren’t intended to be exact. Like with any estimate, it’s somewhere between a guess and a calculation. That calculation/guess is only going to be as good as the data it’s based on.

The sp_estimate_data_compression_savings procedure works by collecting a sampling of data, compressing it, and extrapolating the results. The procedure’s algorithm is roughly:

- Create a temp table called

#sample_tableDBA05385A6FF40F888204D05C7D56D2B(yes, that exact name is hard-coded into the procedure) that matches the specified table in schema & compression - Insert a sample of data from the specified table into the temp table. If the table (the actual row data: clustered index or heap) is less than 5000 pages, then use a 100% sample, else calculate the sample percent using the formula:

100 * 5000 / used page count - The exact rows of the sample is determined by the undocumented table-valued function

sys.generate_table_sample_ddl(), which is hidden away in the resource database. sys.generate_table_sample_ddl()simply uses theSELECT...FROM [table] TABLESAMPLE(N percent);to return that sample- Create the specified index(es) on the temp table

- Check the size of the sampled temp table & it’s indexes

- Compress with the specified compression level

- Get the newly compressed size of the table & indexes

- Extrapolate the sample size/results out to the full table results

- Clean up temp objects

I have two big problems with this algorithm:

- The sampling is unscientific, and you aren’t able to influence sample size. In my example, the table is 3,120,936 KB, or 390,117 pages. Using the formula above, that is 1.28%. If you’re familiar with statistics in SQL Server, then you probably know that under-sampling can cause performance problems, and selecting an ideal sample size can be a complicated task. Similarly, a low-sampled compression estimate may not represent your data well, and can result in bad estimates.

- The pre-compression sizes are based on current index size, but the post-compression sizes are based on ideally-rebuilt indexes. This makes for an apples-to-oranges comparison when trying to judge your compression savings. To do an apples-to-apples comparison, you really want all size comparisons to be based on freshly rebuilt indexes.

How do I estimate space savings?

I don’t estimate. I test it for real.

My ideal way to test compression space savings is to restore the database to a non-production server, then perform index rebuilds at NONE, ROW, and PAGE compression levels. Then I can use the actual space savings to compare my current compression setting to the other two, and calculate real-world results. Having the full database restored in non-Production, with the changed compression level will also allow me to do some performance testing. I want to see how the changed compression settings affect both my index/statistics maintenance jobs, and the standard workload that uses the table. Compression introduces CPU overhead on both reads & writes, so you’ll want to make sure you test that aspect, and weigh performance impact against size benefit.